Developing an AI agent for demonstration purposes is relatively simple. However, creating an AI agent capable of functioning with all the complications associated with real users, unstructured data, scaling, and business key performance indicators is much more difficult than creating a working prototype.

Many teams have demonstrated their ability to launch AI capabilities that are visually stunning, yet will fail when put into use in the real world. These applications may produce erroneous outputs, disrupt business processes, cause user frustration, and/or become prohibitively expensive to support. This article outlines how to develop a production-ready AI agent.

Start With a Clear Business Objective

Don’t begin with the model. Begin with the outcome.

You need to define: (1) the task that you want your agent to complete, (2) the person who will be using the agent, and (3) how you will measure the success of your agent.

Some good examples of measurable business goals are:

- Decrease support response times by 30%.

- Automate invoice extraction to achieve an accuracy of 98% or better.

- Create meeting summaries in less than 10 seconds.

It’s best to avoid setting a vague goal, such as improving productivity. If your goal cannot be measured, then the agent will not provide you with a measurable benefit.

Build an AI System, Not a Prompt

A production AI agent is more than a smart prompt. It is comprised of:

- Input Validation

- Context Retrieval

- Model Reasoning

- Tool Execution

- Output Validation

The model is just one of multiple components making up an AI agent. A model without the appropriate components around it will produce variable and unpredictable results. This is why professional generative AI development services focus on designing complete, production-ready systems.

Choose Appropriate Architecture

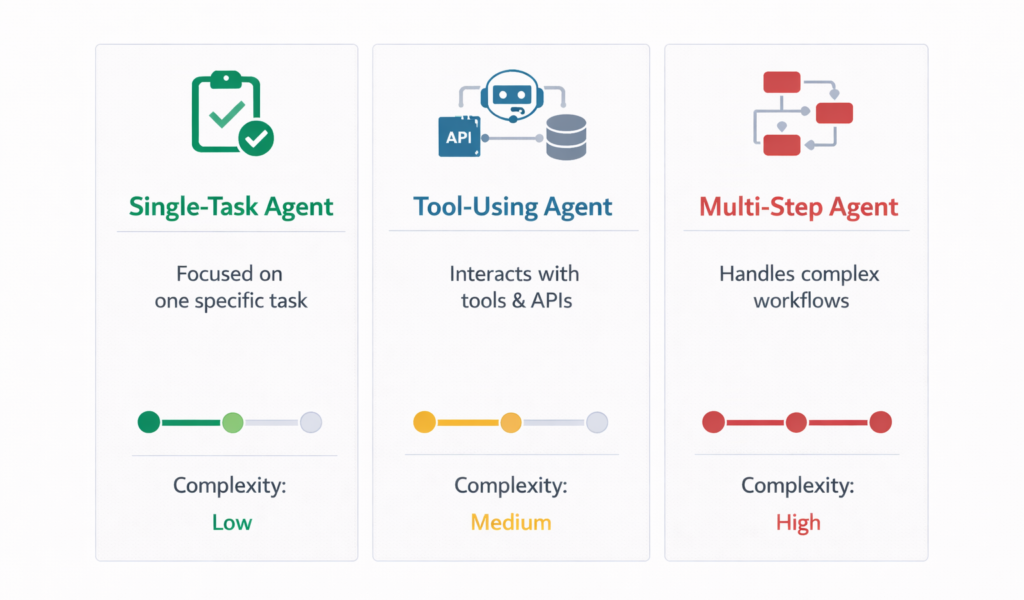

Not all bots need to be autonomous. Select an architecture appropriate for complexity regarding agents, including:

- Single-task agents (with narrow capabilities and predictable behaviors are generally easier to test).

- Tool-using agents (which typically involve interacting with APIs or databases (executing structured action sequences) and require strong error handling).

- Multi-step agents (that break tasks into smaller steps, maintain temporary memory, and provide greater degrees of power and also higher levels of risk).

Keep it simple. Add additional components to your agent only if required in order to successfully complete a task.

Ground the Agent in Trusted Data

Hallucinations are created when contextual information is unreliable.

Use:

- Retrieval-augmented generation (RAG)

- Sources of internal information that have been approved as valid

- Well-defined limits on the responses the agent will provide

Best practices:

- If an answer can be given from the retrieved context, provide it

- The agent may indicate if it does not know the answer

- Assign confidence thresholds

In production, accuracy matters more than creativity.

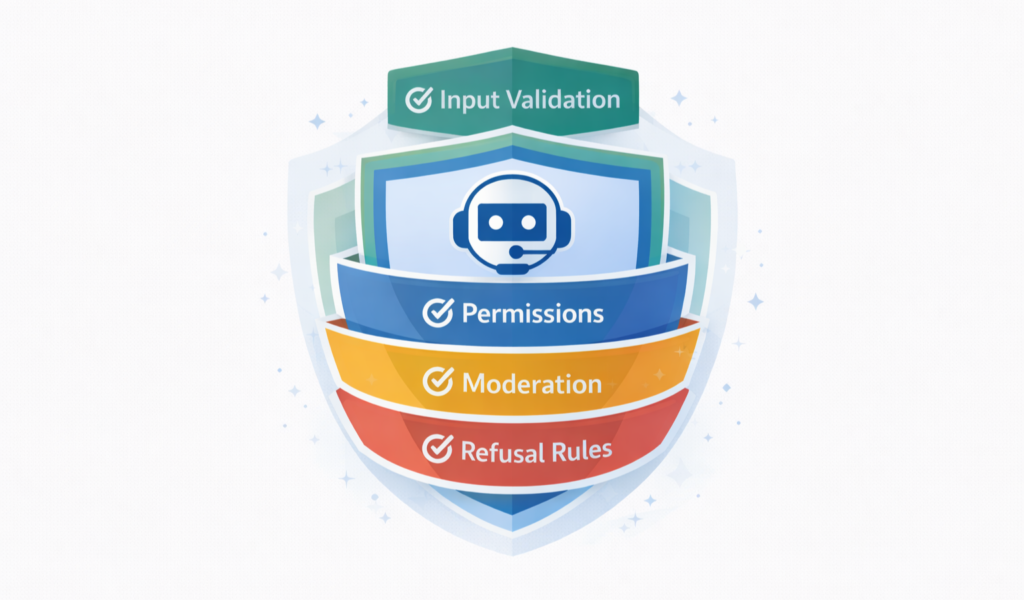

Implement Guardrails Early

Guardrails are designed to protect not only users but also your system.

Examples include:

- Sanitizing Input

- Detecting Prompt Injection

- Verifying Permission

- Moderating Content

You must also establish clear limits as to what an agent cannot do. AI created for production should have predictable and safe production.

Make Observability Your Goal

To make improvements, the first thing that needs to be done is to visually track that progress:

- Track inputs and outputs

- Track Overall Latency and Token Usage

- Track Failure Rates

- Track Failure Patterns

When possible, document the reasoning behind the logs in a way that will assist with future analysis. Keep track of an AI agent like any other service in production (do not treat it as a black box).

Test Edge Cases, Not Just Ideal Scenarios

Many failures occur outside of the generally accepted workflows, so also test for:

- Ambiguous instructions

- Missing data

- Adversarial inputs

- API outages

Automated testing should be augmented with human evaluation.

Cost and Performance Optimization

Technologically impressive agents that respond slowly or are too expensive will not be able to continue functioning.

Guidelines:

- Selecting the appropriate model for any given function

- Minimizing token usage

- Caching frequently requested queries

- Whenever possible, consider asynchronous processing

- Balance your agent’s IQ with the cost of performing an intelligent action.

Continuously Improve After Launch

After launching, you should dynamically improve the product. The deployment is not the last step in the development cycle; it’s the start.

Analyze:

- Where does the user fix the bot?

- What responses are broken?

- What questions create a breakdown?

Continuously refine prompts, logic, and data sources. Production-ready artificial intelligence is an evolving development environment.

Wrapping Up

Successful AI agents in production are defined not by hype, but by structure, constraints, reliable data, and measurable outcomes. Winning teams see their AI agents as software applications and engage in monitoring, testing, and iterative improvement. This is what separates the impressive demonstration from actual business results.